Mujoco is an awesome simulation tool. You’re probably familiar with it from it’s use in the OpenAI gym, or from it featuring in articles and videos on model predictive control and robots learning to walk research. If you’re not familiar with it, you should check it out! Mujoco provides super fast dynamics simulation with a focus on contact dynamics. It’s especially useful for simulating robotic arms and gripping tasks.

There is a growing model repository, but it’s not unlikely you’re going to want to build your own model. It turns out this is relatively easy in Mujoco. There are two parts to defining a model: 1) The STL files, which are 3D models of the robot components, and 2) the XML file, which specifies the kinematic and dynamic relationships in the model. For STL manipulation, we use the program SketchUp because it is freely available with both offline and online versions.

This post will start by going over some settings in SketchUp assuming you haven’t used it before. We’ll then walk through how to set up the STL files for the individual components of the robot model (assuming that you already have the 3D modeling completed), steps for generating a Mujoco XML robot description file, and go over a couple of the more common problems that come up in the process.

If you don’t have the 3D modeling aleady completed, it’s worth doing a quick search around online to see if someone else has already made it because there are a lot out there not posted officially on any model repo. Github is a good source, and so is https://www.traceparts.com/en. We’re not going to cover any 3D modeling how-to here.

Shout out to my colleague Pawel Jaworski who worked through this process and wrote up the first of this document!

Sketchup Settings – Set model units on import

Before opening your STL, in the open file window select your file and click on the Options button next to Import. Select the units that the model was defined in. If you import your object and cannot see it, it is likely that the units selected during the import were incorrect and the object is simply too small to see.

Skechup Settings – Set model units for export

Mujoco uses the units specified in your STL models. ABR Control uses metres, and things generally are easiest when everyone is using the same units. To change your units to meters, go to Model Info > Length Units > 0.0m.

Sketchup Settings – Measurement precision

Before doing any measuring in SketchUp, change your measurement precision in Model Info > Units. precision to the largest number of digits to make sure you get accurate measurements (which you will need when building the Mujoco XML), the default in the online version is 1 significant digit.

Sketchup Settings – Disable snapping

If you are like me then auto-snapping is often a huge pet peeve. To disable it, go to Model Info > Units and unclick Length snapping and Angle snapping.

Sketchup Settings – Xray mode

It can be helpful to set the default view to xray mode so you can see inside the model when manipulating the components. To do this go to Styles > Default Styles > X-ray on the right hand side.

Generating component STL files

A common starting point for modeling is that you have a 3D model of the full robot. When this is the case, the first step in building a Mujoco model is to generate separate STL files for each of the components of the robot that you want to be able to move independently. For each of these component STL files, we want the point where it connects to the joint to be at the origin (0, 0, 0). We’ve found that this streamlines the process of building the model in the XML and makes it much easier to correctly specify the inertial properties.

If your 3D model is already broken up into each dynamic component (i.e. each arm section that moves independently) then you can skip to the section on centering at the origin.

Exporting components to separate STLs

Make sure you have the model unlocked. To do so, choose the Select tool and highlight the entire model. If the outline is red, it is locked. To unlock it, right click and choose Unlock.

| Locked | Unlocked |

To get each component piece: Select the entire arm, deselect your component with Ctrl + Shift + Click, and then delete the rest. Go to the folder icon in the top left and select Export > STL. When exporting your model, make sure to not save your model in ASCII format, choose Binary. Repeat this until you have exported every part as its own STL.

Positioning your object at the origin

For each component, identify where it will connect to the previous component, i.e. where the joint will attach it to the rest of the robot. We want to set up the STL such that this point is at the origin. By doing this we simplify building the XML file later. For the base of the robot this would simply be the bottom of the link, centered.

The easiest way to move the object to the origin is to click a point on the object with the move tool, type [0, 0, 0], and hit enter. This will move the selected point to the origin.

If your object is asymmetrical or you want to center on a point that is difficult to select with your mouse, you can use the measurement tool to set some guidelines. Setting two intersecting lines can help greatly in finding the center of an object (see dotted lines in figure).

Measure the offset distance for the next link

Once the component is appropriately placed at the origin, the next thing we need is the distance to the next attachment point from the origin.

The screenshots below show an example of drawing a verticle guideline to make selecting the next attachment point easy.

When the next attachment point is selected, the Measurements box will display its coordinates. We will need to know all of the offset distances for each component for building the XML file, so make sure you take note!

Some measuring tips

- Press and hold a directional button while measuring to lock an axis. The up arrow locks the z (blue) axis, the left arrow locks the y (green) axis, and the right arrow locks the x (red) axis.

- The measuring tape line changes color to match an axis colors when it is parallel to any axis.

- Notes on how to use the Tape Measure tool effectively

Building your model XML

The easiet way to put together your robot is to build your model up one joint and component at a time, running the simulation to make sure that it works as expected, and then adding the next piece. You will be tempted to assemble a bunch of the model all at once, but this is the siren’s song. You must be strong and resist!

For testing, we created the basic script below, which uses the ABR Control library. To check alignment you can press the space bar to pause the simulation, if you want to make the arm to move then you can change the script to send in some small value instead of zeros.

import mujoco_py as mjp

import glfw

import numpy as np

from abr_control.interfaces.mujoco import Mujoco

from abr_control.arms.mujoco_config import MujocoConfig

robot_config = MujocoConfig(xml_file='example.xml', folder='.')

interface = Mujoco(robot_config, dt=0.001)

interface.connect()

try:

while True:

if interface.viewer.exit:

glfw.destroy_window(interface.viewer.window)

break

interface.send_forces(np.zeros(robot_config.N_JOINTS))

finally:

interface.disconnect()

To run this script with your model, place it in the same directory as your XML file, with a folder called ‘meshes’ that has all of your STLs. If you call your XML file something other than ‘example.xml’ be sure to change that in the script above.

XML model parts

When you’re building your XML file, you’re going to want to have the Mujoco XML Reference constantly open. It’s super detailed and thorough. You can also check out this basic template file on my GitHub which shows the different parts of the file (you will need to fill this out with your own STLs and measurements).

Inside the standard definition tags, the main parts that we’ll be using are:

<asset>– where you import your STLs using the<mesh>tag<worldbody>– where all of the objects in the simulation are defined using<body>tags, including lights, the floor, and of course your robot<acutator>– where you specify which joints defined in your robot can be actuated. The ordering of these joints is important, it should be the same as their order in the kinematic tree (i.e. start with joint0 up to the last joint.)

Body building

The meat of the file is of course in the sequence of <body> tags that define the robot model. Each section of the robot looks like this:

<body name="link_n" pos="0 0 0">

<joint name="joint_n-1" axis="0 0 0" pos="0 0 0"/>

<geom name="link_n" type="mesh" mesh="shoulder" pos="0 0 0" euler="0 0 0"/>

<inertial pos="0 0 0" mass="0.01" diaginertia="0 0 0"/>

<!-- nest the next joint here -->

</body>

Set body position and geoms

Set the offset from the previous body in the pos attribute on the <body> tag, instead of on the geoms. On the <joint> you can then set pos="0 0 0" which we’ve found to help simplify debugging later on.

In each body section you can have several <joint>s and <geom>s. Geoms defined on the same body will be fused together. If you have specific inertia properties for several geoms that are fused together, you will have to create a <body> for each to be able to instantiate their own <inertial> tag. Otherwise, it’s recommended to put them all in the same <body> to optimize simulation speed.

Orientation and inertia

You may need to rotate your STLs to align them properly with the rest of the robot as you build. You can do this either in the <body> or <geom> tags. If you are using an <inertial> tag for this body, we recommend you do this using the euler parameter inside the <geom> tag, instead of inside the <body> tag. If you specify rotation in the <body> tag, you also need to apply the same rotation to your <inertia> parameters, which complicates things.

If you don’t provide an <inertial> tag, the inertia properties will be inferred from the geom.

Contype and conaffinity

If you have geoms that you don’t want to collide with other parts in the model, you can set the contype and conaffinity parameters on the geom tags. This can be handy if you have a tightly-fit 3D model an run into issues with friction, we do this on the UR5 model in the ABR Control library.

End-effector tag for ABR Control

If you’re going to use the ABR Control library operational space controller, you’ll need to add a tag <body name="EE" pos="0 0 0"> at the point of the robot that you want to control. Usually the hand.

Once you’ve added on your robot body segment, save the XML and run the above python script to test out the set up. You will likely need to do some fine tuning by iteratively adjusting the parameters and viewing the model in Mujoco. We’ve found commenting out all of the joints except the joint of interest in the XML file makes assessment much easier.

Template XML file

<mujoco model="example">

<!-- set some defaults for units and lighting -->

<compiler angle="radian" meshdir="meshes"/>

<!-- import our stl files -->

<asset>

<mesh file="base.STL" />

<mesh file="link1.STL" />

<mesh file="link2.STL" />

</asset>

<!-- define our robot model -->

<worldbody>

<!-- set up a light pointing down on the robot -->

<light directional="true" pos="-0.5 0.5 3" dir="0 0 -1" />

<!-- add a floor so we don't stare off into the abyss -->

<geom name="floor" pos="0 0 0" size="1 1 1" type="plane" rgba="1 0.83 0.61 0.5"/>

<!-- the ABR Control Mujoco interface expects a hand mocap -->

<body name="hand" pos="0 0 0" mocap="true">

<geom type="box" size=".01 .02 .03" rgba="0 .9 0 .5" contype="2"/>

</body>

<!-- start building our model -->

<body name="base" pos="0 0 0">

<geom name="link0" type="mesh" mesh="base" pos="0 0 0"/>

<inertial pos="0 0 0" mass="0" diaginertia="0 0 0"/>

<!-- nest each child piece inside the parent body tags -->

<body name="link1" pos="0 0 1">

<!-- this joint connects link1 to the base -->

<joint name="joint0" axis="0 0 1" pos="0 0 0"/>

<geom name="link1" type="mesh" mesh="link1" pos="0 0 0" euler="0 3.14 0"/>

<inertial pos="0 0 0" mass="0.75" diaginertia="1 1 1"/>

<body name="link2" pos="0 0 1">

<!-- this joint connects link2 to link1 -->

<joint name="joint1" axis="0 0 1" pos="0 0 0"/>

<geom name="link2" type="mesh" mesh="link2" pos="0 0 0" euler="0 3.14 0"/>

<inertial pos="0 0 0" mass="0.75" diaginertia="1 1 1"/>

<!-- the ABR Control Mujoco interface uses the EE body to -->

<!-- identify the end-effector point to control with OSC-->

<body name="EE" pos="0 0.2 0.2">

<inertial pos="0 0 0" mass="0" diaginertia="0 0 0" />

</body>

</body>

</body>

</body>

</worldbody>

<!-- attach actuators to joints -->

<actuator>

<motor name="joint0_motor" joint="joint0"/>

<motor name="joint1_motor" joint="joint1"/>

</actuator>

</mujoco>

If you want to use the above template XML file with the example Mujoco simulation script withot having to create your own STLs, you can download the meshes folder from ABR Control’s Jaco 2 model to get going. The placement of things is wildly inaccurate but it’ll get you going. You can also checkout the abr_control/arms/jaco2/jaco2.xml and abr_control/arms/ur5/ur5.xml files for more examples.

Hopefully this is enough to get you started, if you run into questions you can post below or on the Mujoco forums. Happy modeling!

Troubleshooting

SketchUp – I’m importing my model but I can’t see it

It is likely that the units selected during the import were incorrect and the object is simply too small to see. When opening your STL, in the open file window select your file and click on the options button next to Import to change the units.

Mujoco – My arm isn’t moving, or moves slightly and stops

In this case you likely have collisions between links. You can either add a small gap between links (make sure to account for this shift in subsequent links and joints), or you can use the contype and conaffinity tags to set up the model such that collisions between the two components are not calculated.

For example, in our UR5 model, we set the touching geoms between links to have different contype and conaffinity values so that they don’t scrape against one another and prevent movement.

Mujoco – Parts of my model are spinning around wildly

This usually arises from being instantiated in contact with another part of the model. Sometimes it looks like there is clearly no contact between different model segments, but in fact there is because of how the contact dynamics are calculated.

Only convex shapes are supported. To see the shapes being used to calculate the contact dynamics, press F1 while the model is running.

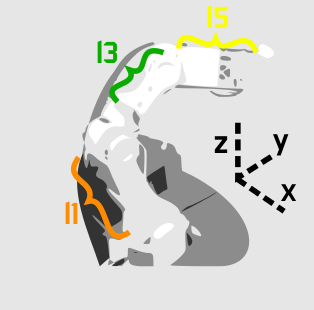

How the STL looks when it’s loaded, lots of space between the neck and jaw The actual shapes that are used for calculating contact dynamics.

There is no space between the neck and jaw. To address this, you need break things up into multiple component STL files and then stitch them together in the XML. For example, in the above skeleton you would need to break it up into skull and spine STLs. NB: I will not be answering any questions about why a skeleton was used in this example.