In my previous post about reinforcement learning I talked about Q-learning, and how that works in the context of a cat vs mouse game. I mentioned in this post that there are a number of other methods of reinforcement learning aside from Q-learning, and today I’ll talk about another one of them: SARSA. All the code used is from Terry Stewart’s RL code repository, and can be found both there and in a minimalist version on my own github: SARSA vs Qlearn cliff. To run the code, simply execute the cliff_Q or the cliff_S files.

SARSA stands for State-Action-Reward-State-Action. In SARSA, the agent starts in state 1, performs action 1, and gets a reward (reward 1). Now, it’s in state 2 and performs another action (action 2) and gets the reward from this state (reward 2) before it goes back and updates the value of action 1 performed in state 1. In contrast, in Q-learning the agent starts in state 1, performs action 1 and gets a reward (reward 1), and then looks and sees what the maximum possible reward for an action is in state 2, and uses that to update the action value of performing action 1 in state 1. So the difference is in the way the future reward is found. In Q-learning it’s simply the highest possible action that can be taken from state 2, and in SARSA it’s the value of the actual action that was taken.

This means that SARSA takes into account the control policy by which the agent is moving, and incorporates that into its update of action values, where Q-learning simply assumes that an optimal policy is being followed. This difference can be a little difficult conceptually to tease out at first but with an example will hopefully become clear.

Mouse vs cliff

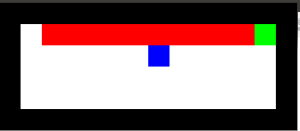

Let’s look at a simple scenario, a mouse is trying to get to a piece of cheese. Additionally, there is a cliff in the map that must be avoided, or the mouse falls, gets a negative reward, and has to start back at the beginning. The simulation looks something like exactly like this:

where the black is the edge of the map (walls), the red is the cliff area, the blue is the mouse and the green is the cheese. As mentioned and linked to above, the code for all of these examples can be found on my github (as a side note: when using the github code remember that you can press the page-up and page-down buttons to speed up and slow down the rate of simulation!)

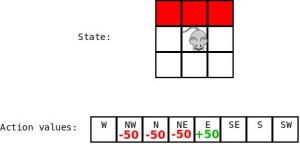

Now, as we all remember, in the basic Q-learning control policy the action to take is chosen by having the highest action value. However, there is also a chance that some random action will be chosen; this is the built-in exploration mechanism of the agent. This means that even if we see this scenario:

There is a chance that that mouse is going to say ‘yes I see the best move, but…the hell with it’ and jump over the edge! All in the name of exploration. This becomes a problem, because if the mouse was following an optimal control strategy, it would simply run right along the edge of the cliff all the way over to the cheese and grab it. Q-learning assumes that the mouse is following the optimal control strategy, so the action values will converge such that the best path is along the cliff. Here’s an animation of the result of running the Q-learning code for a long time:

The solution that the mouse ends up with is running along the edge of the cliff and occasionally jumping off and plummeting to its death.

However, if the agent’s actual control strategy is taken into account when learning, something very different happens. Here is the result of the mouse learning to find its way to the cheese using SARSA:

Why, that’s much better! The mouse has learned that from time to time it does really foolish things, so the best path is not to run along the edge of the cliff straight to the cheese but to get far away from the cliff and then work its way over safely. As you can see, even if a random action is chosen there is little chance of it resulting in death.

Learning action values with SARSA

So now we know how SARSA determines it’s updates to the action values. It’s a very minor difference between the SARSA and Q-learning implementations, but it causes a profound effect.

Here is the Q-learning learn method:

def learn(self, state1, action1, reward, state2):

maxqnew = max([self.getQ(state2, a) for a in self.actions])

self.learnQ(state1, action1,

reward, reward + self.gamma*maxqnew)

And here is the SARSA learn method

def learn(self, state1, action1, reward, state2, action2):

qnext = self.getQ(state2, action2)

self.learnQ(state1, action1,

reward, reward + self.gamma * qnext)

As we can see, the SARSA method takes another parameter, action2, which is the action that was taken by the agent from the second state. This allows the agent to explicitly find the future reward value, qnext, that followed, rather than assuming that the optimal action will be taken and that the largest reward, maxqnew, resulted.

Written out, the Q-learning update policy is Q(s, a) = reward(s) + alpha * max(Q(s')), and the SARSA update policy is Q(s, a) = reward(s) + alpha * Q(s', a'). This is how SARSA is able to take into account the control policy of the agent during learning. It means that information needs to be stored longer before the action values can be updated, but also means that our mouse is going to jump off a cliff much less frequently, which we can probably all agree is a good thing.

thank you for your brief and explicit notes, that was helpful!

but i have a question, do you claiming that Sarsa is Better than QLearning in any aspect?!

suppose you have little information about environment and have a little time , e.g. you have few number of episodes to live in Environment, which one you choose for your agent? Sarsa or Qlearning?

Thanks! Glad you enjoyed them.

I wouldn’t say that SARSA is is better than Q-learning in every aspect, but the benefit of it is that it takes into account what your actual system policy, rather than just assuming that you’re doing the right thing. But if you have a system where your policy is changing a lot it could be much more desirable to use the Q-learning approach and learn assuming that you’re moving optimally, and then incorporate that however you will, rather than having a SARSA system that tries to account for your constant changing movement policy.

In a scenario where you only have a few episodes for learning there’s no real difference between the two, as neither was built to address that situation more efficiently than the other.

Thank you, Glad to find you!;)

but i was thinking QLearning may be better in this scenario, because something that you mentioned above:

” But if you have a system where your policy is changing a lot it could be much more desirable to use the Q-learning approach and learn assuming that you’re moving optimally, and then incorporate that however you will, rather than having a SARSA system that tries to account for your constant changing movement policy”

i think in very first episodes the agents knowledge is too few, so it will changes its policy too much, so in first episodes QLearning may be better, in most of problems, how ever this may depend on very other things in general, specially Environment properties.

in other words i guess QLearning agent will find a sub optimal policy faster,and slowly will changes it toward the optimal policy, but SARSA will find a better policy after a few steps more, when it finds a little knowledge about Environment. how ever i have not enough implementations to test this, i have tested that on one problem and that seems to be true.

what do you think about this?!

I see, I think you might right that you will get a slightly better set of Q-values out of Q-learning rather than SARSA. This could be because Q-learning is ‘looking into the future’ during Q-value assignment (what is the reward from the best possible path from here), whereas SARSA is assigning Q-values based on much more immediate information (what is the reward from what I just did).

That’s not to say that this is because the control policy is changing (it would be the same policy of greedy search with chance of exploration the whole time), but because the mouse ‘gets scared’ of the cliff and takes longer to find a path to the cheese.

So I’ve convinced myself that this makes sense, but it would be a neat thing to test for a small project! Wouldn’t required much modification of the code already there, please let me know if you run it what the results are!

Love the illustration — This post went a long way towards helping me understand the practical differences in these two implementations. Thanks!

That’s great! Glad to hear you enjoyed the post, thanks for the comment!

Thank you for this elaborate explanation!

Reblogged this on Taeyoung Kim and commented:

Things to learn

Great example and animation, it really helped me grasp the difference (or so I hope).

However I have one question or remark.

So in both cases we learn a behavior by experience and then proceed acting based on what we have learned. While learning we try to explore by making stupid decisions now and then, Because sometimes we might learn the decision wasn’t as stupid as we initially thought (maybe there’s more cheese at the bottom of that cliff?)

As seen in the animations the value function found by SARSA assumes we will keep on acting stupid now and then, the value function found by Q-learning assumes we don’t ever act stupid.

But wouldn’t it be beneficial to just stop acting stupid at some point? This obviously depends on the problem we are trying to solve. But say we wanted to teach a robot to bring first aid to wounded humans and avoid mines along the way. We have it learn and experiment for as long as we can in a controlled, non-explosive environment. But once it is actually used in action we prevent it from exploring because we don’t want it to randomly roll into a mine in the name of science.

With Q-learning we actually found the optimal policy of running straight to the cheese while SARSA taught the robot the optimal policy of avoiding its suicidal fits while trying to eventually get to the cheese. The latter would be awful for our no-longer-suicidal first aid robot.

Then again the reward in the mouse example didn’t specify that we were in a hurry. Assuming no time limit or energy expended by moving the SARSA policy is always better as long as we explore and both policies are equally good once we stop exploring.

Hi Marie,

so i’ll rephrase ‘stupid decisions’ as ‘testing out sub-optimal decisions in case our assigned value of a decision is incorrect’. 😀

In my first RL post I explore reducing the chances of randomly choosing a direction for exploration over time, and the results are pretty good! Also, a common practice is to train up the system and then just turn exploration off entirely, like you suggested! So definitely it’s a practice that’s been shown to work well.

Incorporating time / urgency into the problem would be an interesting little project as well, you could have another dimension of the state represent how long the mouse has been around that penalizes large numbers, which would lead to an interesting balance between running alongside the cliff and taking a more conservative / safer path.

But you’re right on both counts!

After reading a lot of stuff about SARSA vs Q-Learning your post made it clear. The quotation that makes the difference clear is:

“In Q-learning it’s simply the highest possible action that can be taken from state 2, and in SARSA it’s the value of the actual action that was taken.

This means that SARSA takes into account the control policy by which the agent is moving, and incorporates that into its update of action values, where Q-learning simply assumes that an optimal policy is being followed”

So my understanding is that SARSA takes a CURRENT POLICY and follows it to calculate the state-action values. So it EVALUATES a non-optimal but current policy. Q-Learning keeps on changing the policy while evaluating it.

Now my questions are:

1. At which point does SARSA explores and attempts to find a better policy?

2. How does SARSA know that it has a good approximation of the State Action values of the policy at-hand (current policy)?

3. Is there a gradient between pure Q-Learning and SARSA?

Hmmm so it’s not quite that Q-Learning changes the policy while evaluating and SARSA doesn’t, they’re both using the most recent information to make decisions. In fact, SARSA even fluctuates a bit more in that sense because it uses the actual trajectory taken to update weights (which may not have been a good decision), rather than Q-learning which just assumes that the best decision is always made. But yes, you’re right SARSA evaluates the current policy rather than assuming an optimal one is being used, like Q-learning does. Alright questions!

1) Both Q-learning and SARSA are for learning information about the environment, and separated from the actual policy that makes decisions. They learn ‘hey if you do this, based on what’s happened before it’s probably about this rewarding’, and then there’s some separate system, uninvolved from the learning that both algorithms do, that defines the control policy and makes the call on which action to take.

2) It does not! It just knows ‘you were there and you went here’, and updates the action values based on this information. It could be a once in a million action but it will update the weights all the same. But as that movement will happen much less than others you’ll end up with a good set of action values given the control policy because as things happen more or less often the associated action values will change accordingly.

3) Hmmm none that I’m aware of! But that’s not saying all too much. It’s a pretty discrete difference between the two. Aside from alternating between Q-learning and SARSA updating nothing comes to mind on combinations.

Hope that helps!

When we turn off exploration, it will no longer make random moves while moving on the cliff right..so when we turn off exploration the policy found by q learning is better..so in what sense are we right to say SARSA has found us a better solution.In the long run dont we want the most optimal policy?

Hi Sridhar,

hmm, so there are a few cases where SARSA comes to a better solution. One is we don’t want to turn off learning, for example if it’s possible that the environment is changing. In that case exploration is always part of our policy and the better solution accounts for that. Other reasons for deviations from the greedy policy (that q-learning assumes) could be that the agent has some restrictions placed upon it (for example: it can’t turn left) or other things that a simple greedy algorithm doesn’t take into account. You q-learner might also not have access to all of the information that the control policy does, so what is a greedy optimization to the q-learner is a very poor action choice for the policy. Hope these examples help clarify benefits of SARSA! 🙂

I want to ask that SARSA can be used for routing the data? If I want to use it as routing protocol in Wireless Sensor Networks then Will it be suitable or possible to implement?

Hi Shiza,

hmm, it’s hard to say, without knowing more about the problem. We’d need to know what the state representation would be, what the possible actions are from each state, what the reward for different state / actions would be. I suspect, however, that SARSA isn’t the best choice for this problem!

In my scenario , I have 50 randomly deployed node with a battery recharging device moving in the WSN environment. With help of that device WSN can remain operational longer, I want to use SARSA for data routing among those nodes so that energy consumption can be reduced. So Can we use it as routing protocol?

Hi Shiza,

hm, so you want to avoid routing data through low-energy nodes? You could set this up as an RL problem, but I’m not sure it’d be a great choice.

To start you’d need to define a state space, possible actions from each state, and a cost function, what would those look like?

yes Exactly, But I am confused How Can I assign the values for state, action…in WSN. Is a node can be a state with the measuring of remaining energy? action can be like to decide which node can send data on the basis of remaining battery capacity? or any other thing m just totaly confused could you please help me?

Hi Shiza,

there’s a bunch of different ways that you could choose to set up the state space and possible actions, etc, it’s often a large part of the solution design. If you want to learn which neighbouring node to send data to you’ll want your state space to incorporate all the information required to make that decision. If you think of your nodes as different grid points on a map that you’re learning about, the initial node as your starting state and the target node as your cheese, then you’re essentially learning the best way to move to the cheese. It’s a more complex problem though, because 1) which node has the cheese is changing, 2) which path is optimal is constantly changing because of the power levels of each node. So in some fashion you probably need to include in your system state information about where the target is and the power levels of the nodes in the map. It expands the state space quite a bit and makes the problem much harder.

Off the top of my head, I would probably make an ego-centric RL agent to learn passing the information to neighbour nodes with high-energy, and an allocentric RL agent to learn the map of the network, and reward passing to nodes that are closer to the target. You could then combine the actions weightings from each to choose which action to take at a given state (which node to send the packet to). Something like that might work! Good luck!

Hi there, thanks for the great posts!

I actually just finished reading the Temporal Difference Chapter of Sutton’s book, so I’m far from an expert in the subject.

Reading the post though, the first thing I thought is this: SARSA’s solution is not finding the optimal solution in the mouse-cheese problem. The optimal policy is to run along the edge, isn’t it?

I’d rather run q-learning until convergence, and when the optimal policy is found, the run-time agent would simply remove the e-greedy part of it and will actually avoid falling into the cliff.

What am I missing here?

Don’t think you’re missing anything, really. You can do what you mention (and many do), but it involves editing your control policy online, and you’ll need to go in and start it up again if the environment changes. What the optimal policy is depends on what control algorithm you’re running, SARSA simply adapts to the control policy that is actually being used, as opposed to assuming that the optimal control policy will be used.

Thanks for the post and thanks for all the replies. It has been very helpful. I am still having trouble and I hope I can ask this question very simply.

Q-Learning and SARSA start out the same way. They greedly take an action on the first state.

Q-learning takes the action that maximizes the q-values for the second state/action pair.

SARSA takes the action that is determined by the policy.

My question lies here:

Isn’t for both cases the policy defined by the q-values? Q-learning is following a policy by taking the action that maximized the q-values for the second state/action pair. SARSA’s policy is defined by the q-values we are learning and that policy would naturally be determined by the maximum state/action pairs.

It seems like a chicken and the egg problem. SARSA needs a policy to follow but that policy is determined by the q-values. The q-values defines a policy by taking the maximum action for a state/action pair.

Hello! I think I understand the confusion; there are two parts here.

1) Q-learning and SARSA are different ways of _updating_ the Q-values. The control policy is entirely independent of how the q-values are updated. The difference between Q-learning and SARSA is that Q-learning makes an assumption about the control policy being used, and SARSA actually takes into account the behaviour of the control policy when updating q-values.

2) For the chicken and egg part, i’m not sure that’s the best way to think about it. This is more a trial/error situation, where we can address the problem and improve performance by iterating. You start out with a poor model of the environment, act on that, see the results, improve the model, repeat. Our control policy rules are fully defined at the beginning, and behaviour only changes because the q-values change (as you say). Does that help?

[…] 区别辨析,直观易懂:Reinforcement Learning part 2: SARSA vs Q-learning […]

Hi,

Thanks for your good and informative post. I have a small question: Let’s assume that wusing past data of the system we were able to calculate T, R , and P and by solving an MDP we got the optimal policy in each state as a result.

Now, assume that the environment is changing slowly and the optimal policy might not remain optimal anymore. Hence, I am looking for an algorithm to update Q value, and policy online. However, I do not want to act randomly because I know that I am already close to optimal policy and also acting randomly might makes problem for me.

So in your opinion, which one of these two algorithms ( or maybe sth else) can be better to update the current near optimal policy?

Thanks,

Assuming that the near optimal policy you’re using is a function of learned or calculated Q-values…Starting from an already learned policy I think you would want to use SARSA, and you should just be able to take out the exploratory noise from the system. This will mean that the controller follows your specified near optimal controller until things behave unexpectedly. At which point it will update the Q-values, and your system will search around a bit to find another controller. Without the exploratory noise though you will miss out on searching for a local minima as the environment updates.

Hi,

Great explanation about the difference!

I just have one question about Q-learning implementation. There are two kind of Q-learning algorithm. The older version, which is what you did in “cliff_Q.py” line 50, selecting the next action before update Q-value. Another one is just update the value and select next action in next iteration. I just want to know, is there any difference between those two versions, which one is correct? Thanks.

Hi Guang,

good question! So, for Q-learning, you’re updating the Q-values of the previous state, and then selecting an action for the current state, so you can choose an action and update or update and then choose an action, the update to the last state’s Q-values won’t have any effect on the action chosen in the current state.

Cheers,

[…] 这里有一篇比课件上更好的解释:https://studywolf.wordpress.com/2013/07/01/reinforcement-learning-sarsa-vs-q-learning/ […]

[…] where the agent interacts with the environment and updates the policy based on actions taken. This post will give additional interesting insights about model-free […]

So if I got this correctly, the difference between Q-learning and SARSA is that SARSA will try to expand the tree of possible states (s’) as much as possible, in order to get a solution from a wider state space, while Q-learning tries to expand the search tree in a more minimal fashion, and relying more on heuristics to find a good solution.

Hmmm, i don’t think that’s quite right. SARSA will update the expected reward of each state based on the actual control policy (which may be sub-optimal) where Q-learning updates the expected reward of each state assuming that the controller will always pick the optimal action. So SARSA will account for the chance that the controller sometimes unexpectedly jumps off the ledge, and you should stay away from the ledge, where Q-learning never will. Does that help?